import { Callout } from 'nextra/components';

# Multi-Model Service Provider Support

<Image
  alt={'Multi-Model Service Provider Support'}
  src={'https://github.com/lobehub/lobe-chat/assets/28616219/b164bc54-8ba2-4c1e-b2f2-f4d7f7e7a551'}
  cover
/>

<Callout>Available in version 0.123.0 and later</Callout>

As LobeChat has developed, we have come to understand how important a diverse set of model service providers is for meeting the community's needs in AI conversation services. We have therefore expanded support from a single provider to multiple model service providers, giving users a more diverse and richer selection of conversations.

This lets LobeChat adapt more flexibly to the needs of different users while giving developers a wider range of choices.

## Supported Model Service Providers

We have implemented support for the following model service providers:

- **AWS Bedrock**: Integrates with the AWS Bedrock service and supports models such as **Claude / Llama 2**, providing powerful natural language processing capabilities. [Learn more](https://aws.amazon.com/cn/bedrock)
- **Google AI (Gemini Pro, Gemini Vision)**: Provides access to Google's **Gemini** series models, including Gemini Pro and Gemini Vision, to support advanced language understanding and generation. [Learn more](https://deepmind.google/technologies/gemini/)
- **ChatGLM**: Adds the **ChatGLM** series models from Zhipu AI (GLM-4/GLM-4-vision/GLM-3-turbo), giving users another efficient conversation model choice. [Learn more](https://www.zhipuai.cn/)
- **Moonshot AI (Dark Side of the Moon)**: Integrates the Moonshot series models from Moonshot AI, an innovative AI startup from China, aiming to provide deeper conversation understanding. [Learn more](https://www.moonshot.cn/)

We also plan to support more model service providers, such as Replicate and Perplexity, to further enrich our service provider library. If you would like LobeChat to support your favorite service provider, feel free to join our [community discussion](https://github.com/lobehub/lobe-chat/discussions/1284).

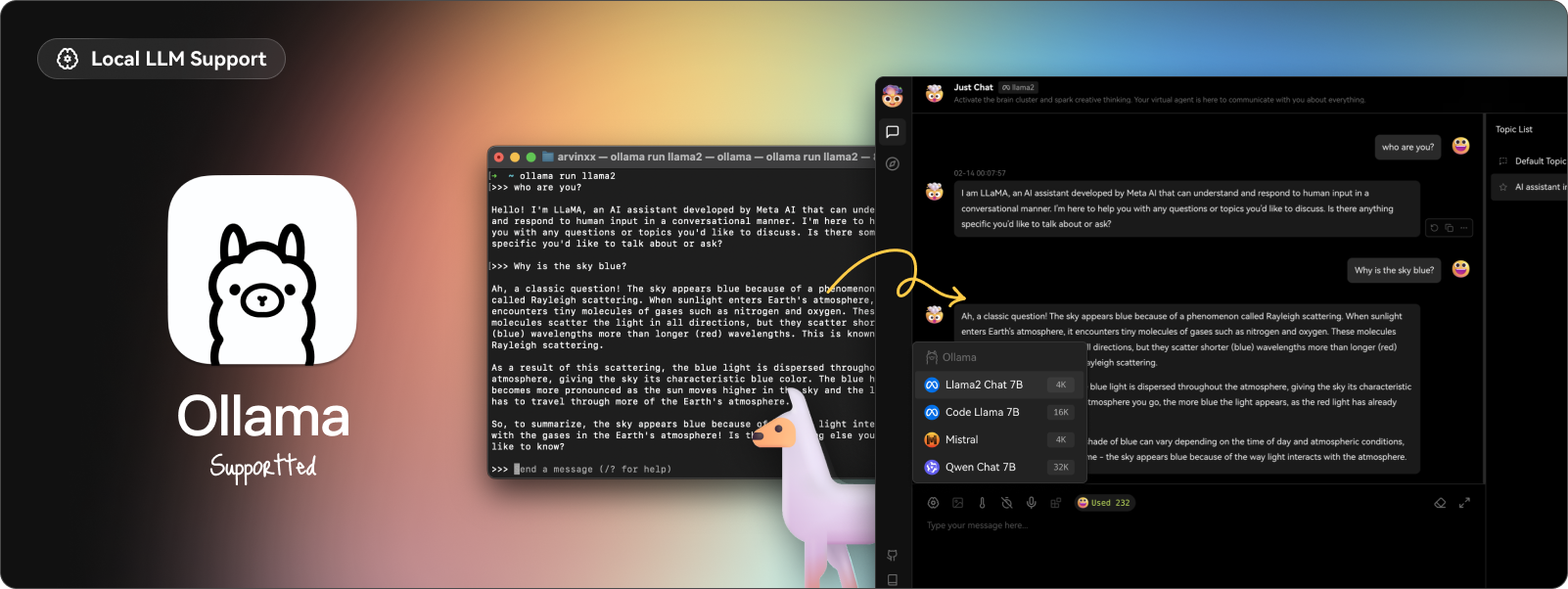

## Local Model Support

To meet users' specific needs, LobeChat also supports local models through [Ollama](https://ollama.ai), letting users flexibly run their own or third-party models. For more details, see [Local Model Support](/zh/features/local-llm).
